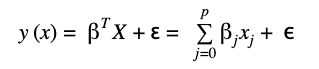

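The multiple linear regression model states that a response $y$ can be estimated from a set of $p$ input features $x_1, \dots, x_p$ plus an error term $\varepsilon$. The model can be expressed with the following mathematical equation:

$$y = \beta_0 + \beta_1 x_1 + \dots + \beta_p x_p + \varepsilon = \beta^T X + \varepsilon$$

$\beta^T X$ is the matrix notation of the equation, where $\beta, X \in \mathbb{R}^{p+1}$ and $\varepsilon \sim N(\mu, \sigma^2)$. The extra dimension comes from the intercept: $X = (1, x_1, \dots, x_p)^T$ pairs a constant 1 with $\beta_0$.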
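
To make the matrix notation concrete, here is a minimal NumPy sketch. It rests on assumptions that are not part of the lesson: the helper names (`add_intercept`, `predict`, `fit_least_squares`) are hypothetical, the data is simulated, the error term is drawn with mean 0, and the coefficients are estimated by ordinary least squares, which is one common choice rather than something the text prescribes.

```python
import numpy as np


def add_intercept(features):
    """Prepend a column of ones so that beta[0] plays the role of the intercept."""
    features = np.asarray(features, dtype=float)
    ones = np.ones((features.shape[0], 1))
    return np.hstack([ones, features])


def predict(beta, features):
    """Evaluate beta^T X for every row of `features` (shape: n x p)."""
    return add_intercept(features) @ np.asarray(beta, dtype=float)


def fit_least_squares(features, response):
    """Estimate beta by ordinary least squares (an assumed fitting method)."""
    beta, *_ = np.linalg.lstsq(add_intercept(features), response, rcond=None)
    return beta


# Simulated data: n observations, p features, p + 1 coefficients.
rng = np.random.default_rng(0)
n, p = 200, 3
true_beta = np.array([1.0, 2.0, -0.5, 0.3])

x = rng.normal(size=(n, p))
# Assumption: the error term has mean 0 and standard deviation 0.1.
noise = rng.normal(loc=0.0, scale=0.1, size=n)
y = predict(true_beta, x) + noise

beta_hat = fit_least_squares(x, y)
print(beta_hat)
```

With this little noise the estimated `beta_hat` should land close to `true_beta`. Adding the column of ones is what makes both vectors live in $\mathbb{R}^{p+1}$.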